Dask jobqueue on Levante

According to the official website, Dask jobqueue can be used to deploy Dask on job queuing systems such as PBS, Slurm, MOAB, SGE, LSF, and HTCondor. Since the queuing system on Levante is Slurm, we are going to show how to start a Dask cluster there. The idea is as simple as the one described here. The difference is that the workers can be distributed across multiple nodes of the same partition. Using Dask jobqueue, you can launch a Dask cluster and its workers as Slurm jobs. In this case, Jupyterhub only acts as an interface, so Dask can use more resources than those allocated to your Jupyterhub session (profiles).

How to start a Dask cluster using SLURMCluster

Import the required classes

from dask_jobqueue import SLURMCluster

from dask.distributed import Client

Set up the cluster

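cluster = SLURMCluster(name='dask-cluster',
                       cores=1,              # cores per Slurm job
                       memory='16GB',        # memory per Slurm job
                       processes=1,          # worker processes per Slurm job
                       interface='ib0',      # network interface used for communication
                       queue='',             # Slurm partition, fill in
                       project='',           # project account to charge, fill in
                       walltime='12:00:00',  # maximum run time of each job
                       asynchronous=False)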

The important parameters are project, queue and interface. The others can be configured depending on the target partition.
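Because `queue` and `project` are left empty in the example above, it can help to check the settings before creating the cluster. The following is a minimal sketch and not part of Dask jobqueue: `check_cluster_settings` is a hypothetical helper, and the partition name `compute` and the project `ab1234` are placeholders to replace with your own values.

```python
# Hypothetical helper (not part of dask_jobqueue): verify that the
# parameters the text calls important are actually filled in.
REQUIRED = ("project", "queue", "interface")


def check_cluster_settings(settings):
    """Return the settings unchanged, or raise if a required one is empty."""
    missing = [key for key in REQUIRED if not settings.get(key)]
    if missing:
        raise ValueError("missing cluster settings: " + ", ".join(missing))
    return settings


settings = check_cluster_settings({
    "name": "dask-cluster",
    "cores": 1,
    "memory": "16GB",
    "processes": 1,
    "interface": "ib0",
    "queue": "compute",    # placeholder partition name
    "project": "ab1234",   # placeholder project account
    "walltime": "12:00:00",
})
```

The validated dictionary could then be passed on as `SLURMCluster(**settings)`.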

Start the cluster and client

client = Client(cluster)

client

Add workers

cluster.scale(10)

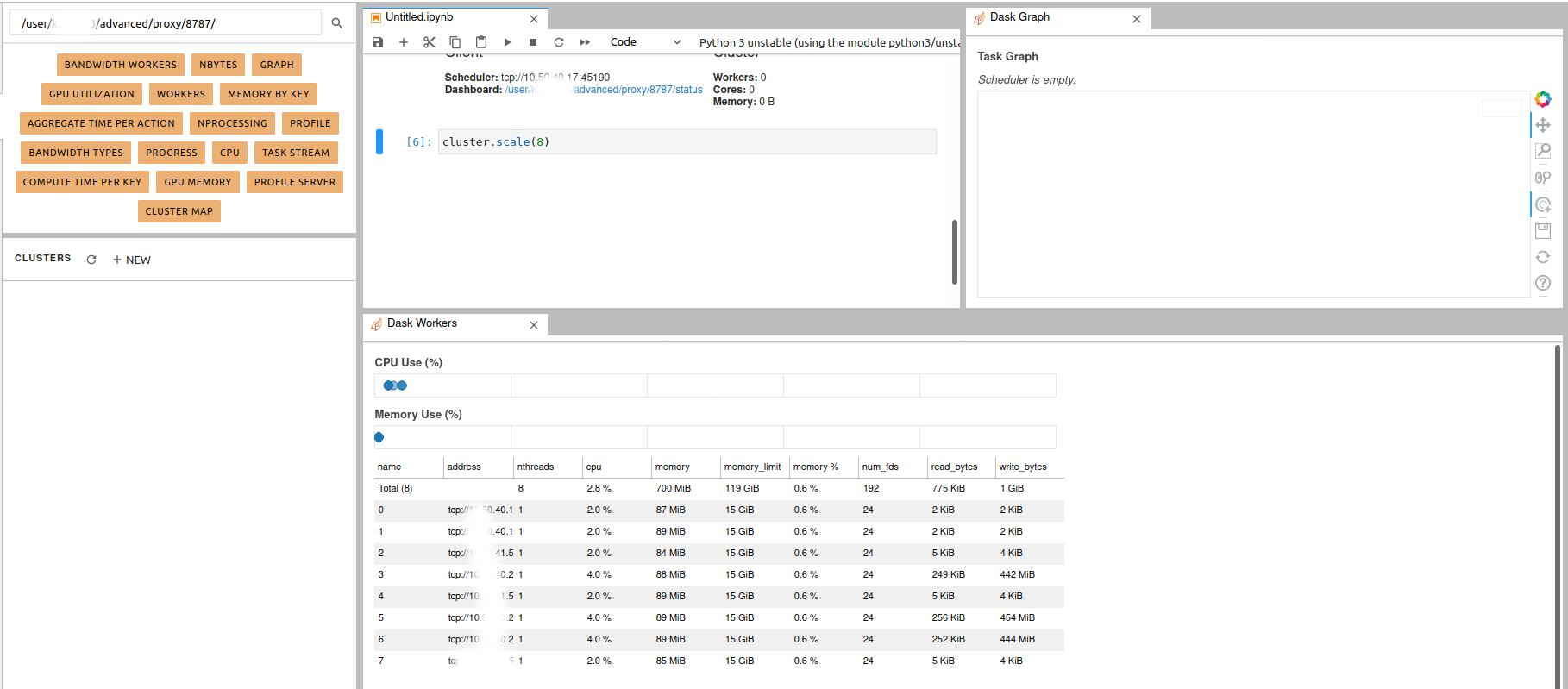
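`cluster.scale(10)` asks for ten workers. To our understanding, Dask jobqueue groups workers into Slurm jobs according to `processes`, so the number of submitted jobs is the worker count divided by `processes`, rounded up. The sketch below only illustrates that arithmetic; `jobs_needed` is a hypothetical helper, not a library function.

```python
import math


def jobs_needed(n_workers, processes_per_job):
    """Number of Slurm jobs required to host n_workers worker processes."""
    if processes_per_job < 1:
        raise ValueError("processes_per_job must be at least 1")
    return math.ceil(n_workers / processes_per_job)


print(jobs_needed(10, 1))  # 10 jobs for the configuration above
print(jobs_needed(10, 4))  # 3 jobs if each job ran 4 worker processes
```

If you prefer to think in jobs rather than workers, `cluster.scale(jobs=10)` requests a number of jobs directly.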

Dask dashboard

The Dask dashboard works well without any further modification to the config files as described here. You can even switch between dashboards by changing the port in the dashboard link.
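Switching dashboards by editing the port can be sketched as a small string operation. This assumes the dashboard link carries the port as a `/proxy/<port>/` path segment, which may differ on your system; the URL and the helper `with_port` are made up for illustration.

```python
import re


def with_port(dashboard_link, port):
    """Return dashboard_link with the port in its /proxy/<port>/ segment replaced."""
    new_link, count = re.subn(r"/proxy/\d+/", f"/proxy/{port}/", dashboard_link, count=1)
    if count == 0:
        raise ValueError("no /proxy/<port>/ segment found in link")
    return new_link


# Placeholder link; use the one shown by your own client instead.
link = "https://jupyterhub.example.org/user/someone/proxy/8787/status"
print(with_port(link, 8788))
```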

Note

Do not forget to close and shut down the Dask cluster when you finish your work. You can use close() and shutdown().
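One way to make sure the cleanup always happens, even when the work fails, is to wrap it in `try`/`finally`. The sketch below is generic Python rather than Dask-specific code: `run_with_cleanup` and its arguments are hypothetical names, and it only assumes that the client and cluster objects offer a `close()` method, as the note above states.

```python
def run_with_cleanup(make_cluster, make_client, work):
    """Create a cluster and client, run work(client), and always close both."""
    cluster = make_cluster()
    try:
        client = make_client(cluster)
        try:
            return work(client)
        finally:
            client.close()   # disconnect the client first
    finally:
        cluster.close()      # then close the cluster itself
```

With Dask this could be called as `run_with_cleanup(lambda: SLURMCluster(...), Client, my_function)`, where the `SLURMCluster` arguments are those shown earlier and `my_function` stands for your own code.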